This project aims to create a system for training musical AI models in a web browser. In previous work we developed a set of Python programs for training small ML models that predict musical gesture data. Our system was called IMPS for “Intelligent Music Prediction System”. We want to make this work in a web browser so that it is accessible to a wide range of users and helps create many new musical AI instruments.

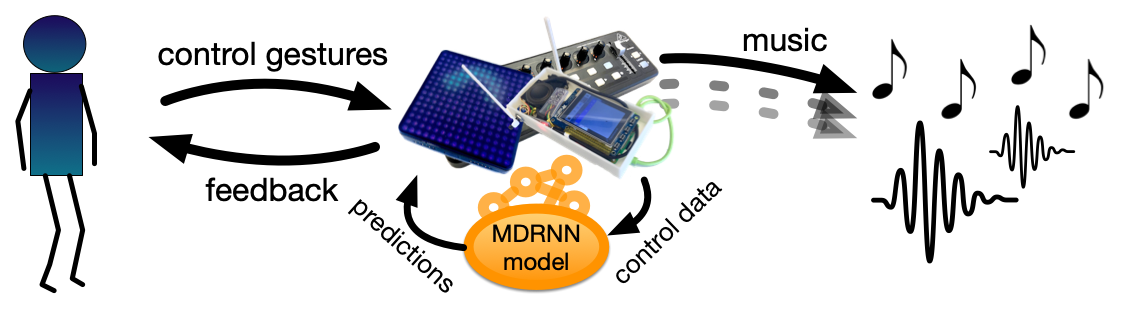

The challenge here is to engage with web-based deep learning systems such as TensorFlow.js and to help create something like “Teachable Machine” for music. You might start by implementing the MDRNN (mixture density recurrent neural network) model from IMPS and then move on to other useful musical ML models.
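As a rough illustration of what the output stage of a mixture density network involves, the sketch below evaluates the negative log-likelihood of a one-dimensional Gaussian mixture and draws a sample from it in plain TypeScript. This is not the IMPS implementation: the names `MixtureParams`, `mixtureNll`, and `sampleMixture` and the one-dimensional simplification are assumptions made for illustration, and in a real MDRNN these parameters would be produced by a recurrent network (for example, one built with TensorFlow.js).

```typescript
// Hypothetical container for the parameters of a 1D Gaussian mixture.
interface MixtureParams {
  logits: number[]; // unnormalised mixture weights
  means: number[];  // component means
  sigmas: number[]; // component standard deviations (must be positive)
}

// Numerically stable softmax: turns logits into weights that sum to 1.
function softmax(logits: number[]): number[] {
  const max = Math.max(...logits);
  const exps = logits.map((l) => Math.exp(l - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Density of a normal distribution at x.
function gaussianPdf(x: number, mean: number, sigma: number): number {
  const z = (x - mean) / sigma;
  return Math.exp(-0.5 * z * z) / (sigma * Math.sqrt(2 * Math.PI));
}

// Negative log-likelihood of one observation under the mixture.
// This is the quantity a mixture density network is trained to minimise.
function mixtureNll(p: MixtureParams, x: number): number {
  const weights = softmax(p.logits);
  let density = 0;
  for (let k = 0; k < weights.length; k++) {
    density += weights[k] * gaussianPdf(x, p.means[k], p.sigmas[k]);
  }
  return -Math.log(density + 1e-12); // small constant avoids log(0)
}

// Draw one sample: choose a component by weight, then sample that Gaussian
// using the Box-Muller transform. `rand` can be swapped for a seeded source.
function sampleMixture(p: MixtureParams, rand: () => number = Math.random): number {
  const weights = softmax(p.logits);
  let u = rand();
  let k = 0;
  while (k < weights.length - 1 && u >= weights[k]) {
    u -= weights[k];
    k++;
  }
  const u1 = Math.max(rand(), 1e-12);
  const u2 = rand();
  const normal = Math.sqrt(-2 * Math.log(u1)) * Math.cos(2 * Math.PI * u2);
  return p.means[k] + p.sigmas[k] * normal;
}
```

Passing the random source as a parameter keeps sampling reproducible in tests; a musical model would typically sample several dimensions at once (for example a time delta plus one or more control values).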

The new system you will build should help users capture music data from a MIDI interface, train one of our off-the-shelf AI models, and then generate musical output. All of this should happen in a web browser, and we intend to use this work in future research projects with musicians.
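The capture step could look something like the following minimal sketch, which turns raw MIDI messages into rows of time delta and normalised value suitable for training a small sequence model. The row format, the `GestureRecorder` class, and the choice to record only control change and note-on messages are assumptions for illustration, not the format IMPS actually uses.

```typescript
// Hypothetical training row: seconds since the previous event, and a value in [0, 1].
interface GestureRow {
  dt: number;
  value: number;
}

const CONTROL_CHANGE = 0xb0; // MIDI status nibble for control change
const NOTE_ON = 0x90;        // MIDI status nibble for note on

// Convert a 3-byte MIDI message to a normalised value, or null if it is ignored.
function midiToValue(data: ArrayLike<number>): number | null {
  if (data.length < 3) return null;
  const kind = data[0] & 0xf0; // strip the channel bits
  if (kind === CONTROL_CHANGE) return data[2] / 127; // controller value
  if (kind === NOTE_ON && data[2] > 0) return data[1] / 127; // note number
  return null; // note off and other messages are skipped in this sketch
}

// Accumulates gesture rows from a stream of timestamped MIDI messages.
class GestureRecorder {
  private rows: GestureRow[] = [];
  private lastTimeMs: number | null = null;

  // timeStampMs is a millisecond timestamp, such as an event's timeStamp.
  add(data: ArrayLike<number>, timeStampMs: number): void {
    const value = midiToValue(data);
    if (value === null) return;
    const dt = this.lastTimeMs === null ? 0 : (timeStampMs - this.lastTimeMs) / 1000;
    this.lastTimeMs = timeStampMs;
    this.rows.push({ dt, value });
  }

  // Returns a copy so callers cannot mutate the recorder's internal state.
  getRows(): GestureRow[] {
    return this.rows.slice();
  }
}
```

In a browser, the messages would likely come from the Web MIDI API: after `navigator.requestMIDIAccess()` resolves, each input's `onmidimessage` handler can pass the event's `data` and `timeStamp` to `add`. Keeping the parsing logic free of browser APIs means it can be tested outside the browser.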

This is a complex project and might involve multiple students working on different parts that will fit together.

As part of this project, you will conceptualise, create, and evaluate a musical system. You’ll need to be comfortable learning new languages and should enjoy working with physical hardware. It would be advantageous to have taken Sound and Music Computing and/or Human Computer Interaction.

You can take inspiration from some of our previous music tech projects that you can see here.

For an Honours or Master's project, we would expect you to create a working prototype that includes an AI model and enables interactive sound or music to be created. You would also need to complete a formal evaluation of the system. This project could also be the basis of a wider PhD project.

Please read information about joining the Sound, Music, and Creative Computing Lab before applying for this project.

How to Apply

To apply for this project, contact Charles Martin.

Include:

- your CV

- your unofficial transcript (if you are an ANU student)

- a brief statement (200 words) in your email explaining how you would approach this project

Make sure to specify the skills and accomplishments you have that would help you to complete this project.

Useful Papers and Resources:

- An Interactive Musical Prediction System with Mixture Density Recurrent Neural Networks

- IMPS

- Intelligent Agents and Networked Buttons Improve Free-Improvised Ensemble Music-Making on Touch-Screens

- Can Machine Learning Apply to Musical Ensembles?

- Deep Models for Ensemble Touch-Screen Improvisation

- Vigliensoni et al. A small-data mindset for generative AI creative work