Introduction

Robots interact with human environments in diverse ways, undertaking tasks as varied as delivery, cleaning, and mapping, and they are typically equipped with powerful sensors. During operation, a robot may capture imagery of its surroundings and is therefore liable to record sensitive information, such as human faces and vehicle licence plates. Robotic data collection thus carries a risk of privacy violations, an unwanted side effect of the increasing deployment of robots. Moreover, these privacy risks may become a serious obstacle to the widespread adoption of robotics in public life. The future of robotics is therefore more closely bound up with questions of surveillance ethics, privacy, and trust than has previously been recognised, and it is imperative to investigate and develop privacy-preserving solutions in this domain.

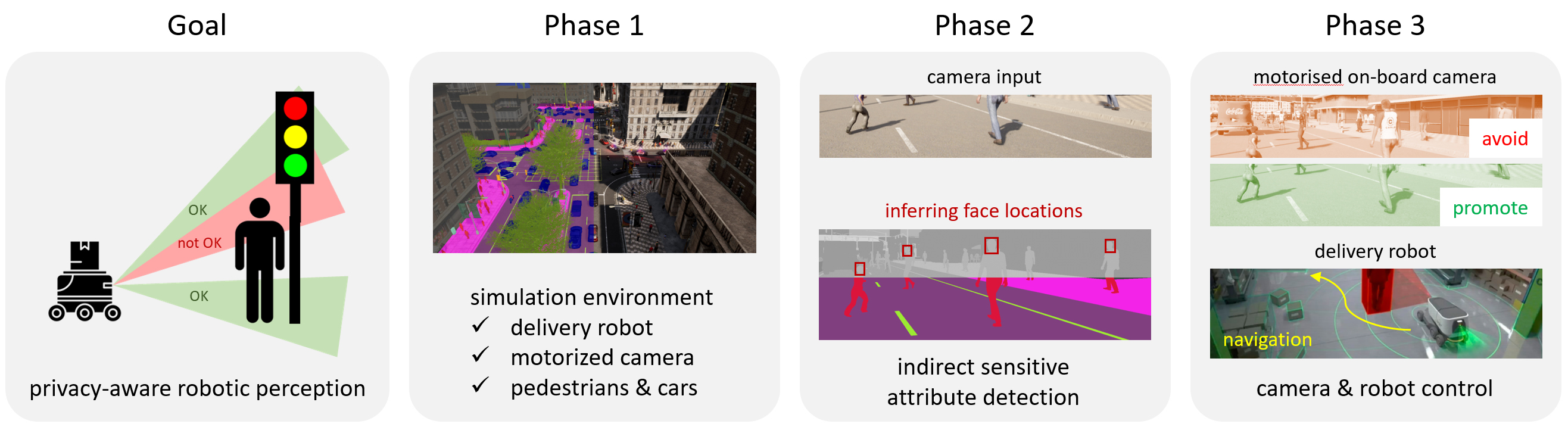

Existing solutions overlook robotic data collection itself as a point of intervention and instead aim to secure data during post-processing, for example by detecting and blurring licence plates. However, this is not a robust strategy. Raw data is susceptible to theft, corporate misuse, or legally compelled disclosure. Detection software is imperfect and vulnerable to adversarial attacks, and blurring or masking routines can leak information. In this project, we propose to develop camera control strategies for robots that actively avoid capturing sensitive data. This will require inferring whether unobserved regions contain sensitive content, and weighing the efficiency–privacy trade-offs that such strategies may impose on robot operation. This “actively avoid collection” paradigm, together with the technological solutions developed in this project, will promote the responsible and secure adoption of robotic technologies in society, so that their benefits do not come at the cost of privacy.

Keywords: privacy, simulation engine, AI, deep learning, computer vision, robotics

Research Objectives:

- To create a simulated environment for testing privacy-preserving robotic perception algorithms.

- To develop an indirect detection approach that can infer the presence of pre-specified sensitive content (e.g., faces or licence plates) from surrounding visual evidence, without directly observing the regions in which it appears.

- To learn control policies that allow a robot to complete its tasks while actively avoiding the capture of sensitive data.

Requirements:

We are looking for motivated students with a strong interest in computer vision and robotics.

- C++/Python

- PyTorch

- Experience in deep learning, computer vision, or simulation engines (e.g., CARLA).

Team

Yunzhong Hou is a Research Fellow with the School of Computing. His research focuses on camera control and optimisation, and on connecting 2D and 3D vision through multi-view systems.

Dylan Campbell is a Lecturer at the ANU School of Computing with a PhD in computer vision from ANU. His particular expertise is in 3D vision, and his work on camera pose estimation, which is relevant to this project, received a Marr Prize Honourable Mention.

Rahul Shome is a Lecturer at the ANU School of Computing. He obtained his PhD from Rutgers University and was a Rice Academy Fellow at Rice University. He has been recognised with awards at international robotics conferences. He is an expert in robot planning algorithms and has an ongoing focus on human factors in robot reasoning, including privacy.

Michael Barnes is a Research Fellow in the School of Philosophy at ANU. His PhD is from Georgetown University. His primary research concerns the ethics of emerging technology, with a focus on communications technologies.

Contact

For more details, please email Yunzhong.